Hyper-threading and CPU time

When is a CPU second not a CPU second? When you are running with hyper-threading (aka HT, HTT, Simultaneous Multi-Threading (SMT), etc.) enabled.

Hyper-threading turns a single physical CPU core into two (or more) logical CPU cores, allowing two (or more) user threads to execute simultaneously, sharing the resources (execution units, register file, cache, etc.) of the physical core. Because of this sharing, a hyper-threaded core does not deliver double the performance of a physical core. Additionally, the "latency" of a thread on a busy hyper-threaded core is higher; user threads will use more CPU time to complete a given workload. The advantage of hyper-threading is increased overall throughput, but the size of that increase is highly workload dependent.

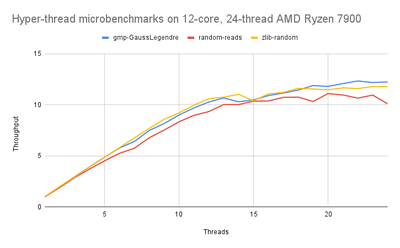

In microbenchmarks, the throughput increase achieved by hyper-threading is minimal: all three of the given algorithms have relatively small code, should fit well in the cache hierarchy, and saturate the CPU core's execution pipelines.

Workloads that have much larger memory and code footprints, exceed cache sizes, and frequently stall execution pipelines on memory accesses will achieve a larger throughput increase with hyper-threading; the CPU's resources may be utilised by the second thread while the first thread is stalled on a memory access.

This can be seen on large compiles. Note that when utilising hyper-threading, the compile wall time decreased by around 13%, but the overall CPU time increased significantly, by around 55%. This was run on an AMD Ryzen 9 7900 with 12 cores, 24 threads, running NetBSD-10.

sh$ time make -j 12

414.17s real 3896.00s user 334.77s system

sh$ time make -j 24

361.43s real 6024.00s user 475.59s system
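The percentages quoted above follow directly from the two `time` outputs. As a minimal sketch (the function name `pct_change` is hypothetical, and awk is used only for the floating-point arithmetic):

```sh
# pct_change OLD NEW: print the percentage change from OLD to NEW.
# (Hypothetical helper, not part of the original measurements.)
pct_change() {
    awk -v old="$1" -v new="$2" 'BEGIN { printf "%.1f\n", (new - old) / old * 100 }'
}

pct_change 414.17 361.43     # wall time, -j 12 vs -j 24: prints -12.7
pct_change 3896.00 6024.00   # user CPU time, -j 12 vs -j 24: prints 54.6
```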

Below are simple demonstrations of the impact of hyper-threading:

NetBSD 4.0 on a Pentium 4

The system here has an "Intel(R) Pentium(R) 4 CPU 2.80GHz", single core (one "physical" CPU) with hyper-threading enabled (giving two "logical" CPUs), running NetBSD 4.0 with an SMP kernel. We run a deterministic unit of work on an idle system:

ksh$ time gzip -9 < zz > /dev/null

10.28s real 10.05s user 0.24s system

ksh$ time gzip -9 < zz > /dev/null

10.26s real 10.05s user 0.20s system

ksh$ time gzip -9 < zz > /dev/null

10.31s real 10.08s user 0.23s system

The times are fairly consistent and, roughly, real = user + sys. Next we add an arbitrary load to the system. We assume the kernel will now schedule the two threads on separate logical CPUs, and it is then up to the CPU's hyper-threading implementation how the instructions are scheduled on the single physical core.

ksh$ perl -e 'while(1){}' &

[1] 9382

ksh$ time gzip -9 < zz > /dev/null

15.36s real 14.96s user 0.36s system

ksh$ time gzip -9 < zz > /dev/null

15.49s real 14.97s user 0.34s system

ksh$ time gzip -9 < zz > /dev/null

15.41s real 14.95s user 0.37s system

OK, so what has happened here? The real time has increased by about 50%, but so has the user time. On the same system with hyper-threading disabled, you would expect the user time to remain about the same, and the real time to approximately double. Here, because both threads are really sharing the same core and its resources, they tend to compete and slow each other down. However, as the real time has not doubled, the overall throughput of the system has increased over the uni-processor case.
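To put a rough number on that throughput claim, the sketch below compares two co-running processes against running the same two processes back to back on a single logical CPU. It assumes both processes are slowed equally, which is only an approximation here because the load is a perl busy loop rather than a second gzip. The function name `ht_gain` is hypothetical.

```sh
# ht_gain T_ALONE T_SHARED: throughput of two co-running processes relative
# to running them one after the other on a single logical CPU.
# T_ALONE  = time for one process on an otherwise idle core
# T_SHARED = time for the same process while the sibling thread is busy
ht_gain() {
    awk -v alone="$1" -v shared="$2" 'BEGIN { printf "%.2f\n", 2 * alone / shared }'
}

# Average real times from the Pentium 4 runs above: 10.28s idle, 15.42s loaded.
ht_gain 10.28 15.42   # prints 1.33
```

Under these assumptions the Pentium 4 completes about a third more work per unit of wall time with both logical CPUs busy. A result of 1.00 would mean no benefit at all, and 2.00 would match a second physical core.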

Also, adding more load only increases the real time, as only two threads can ever be executed in parallel.

ksh$ perl -e 'while(1){}' &

[2] 12480

ksh$ perl -e 'while(1){}' &

[3] 29686

ksh$ perl -e 'while(1){}' &

[4] 12019

ksh$ time gzip -9 < zz > /dev/null

38.14s real 15.12s user 0.33s system

ksh$ time gzip -9 < zz > /dev/null

34.45s real 15.11s user 0.25s system

ksh$ time gzip -9 < zz > /dev/null

37.96s real 15.04s user 0.34s system

A useful metric here is Cycles Per Instruction (CPI), which is also often quoted as its inverse, Instructions Per Cycle (IPC). In the hyper-threading example above, when the second hyper-thread was busy, the CPU took more cycles to execute the same set of instructions. Had we measured the CPI of gzip, we would have seen an increase roughly proportional to the increase in user CPU time.
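The proportionality follows from the usual relation between CPU time, instruction count, CPI and clock frequency. As a sketch, assuming the instruction count $N$ and the clock frequency $f$ are the same in both runs (which ignores frequency scaling; the symbols are introduced here only for illustration):

```latex
t_{\text{user}} = \frac{N \cdot \mathrm{CPI}}{f}
\quad\Longrightarrow\quad
\frac{t_{\text{busy}}}{t_{\text{idle}}}
  = \frac{\mathrm{CPI}_{\text{busy}}}{\mathrm{CPI}_{\text{idle}}}
```

For the Pentium 4 runs above, user time rising from about 10.05s to about 14.96s would correspond to CPI rising by a factor of about 1.49 under these assumptions.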

For reference, the CPU tested was:

cpu0: Intel Pentium 4 (686-class), 2798.79 MHz, id 0xf25

cpu0: features 0xbfebfbff<FPU,VME,DE,PSE,TSC,MSR,PAE,MCE,CX8,APIC,SEP,MTRR>

cpu0: features 0xbfebfbff<PGE,MCA,CMOV,PAT,PSE36,CFLUSH,DS,ACPI,MMX>

cpu0: features 0xbfebfbff<FXSR,SSE,SSE2,SS,HTT,TM,SBF>

cpu0: features2 0x4400<CID,xTPR>

cpu0: "Intel(R) Pentium(R) 4 CPU 2.80GHz"

cpu0: I-cache 12K uOp cache 8-way, D-cache 8KB 64B/line 4-way

cpu0: L2 cache 512KB 64B/line 8-way

cpu0: ITLB 4K/4M: 64 entries

cpu0: DTLB 4K/4M: 64 entries

cpu0: Initial APIC ID 1

cpu0: Cluster/Package ID 0

cpu0: SMT ID 1

cpu0: family 0f model 02 extfamily 00 extmodel 00

Linux 2.6 on a Xeon X5650

Second test, on Linux 2.6.38 on a 6-physical core Xeon (Intel(R) Xeon(R) CPU X5650 @ 2.67GHz). We use taskset to select which cores we're going to run these processes on:

bash$ taskset -c 5 time gzip -9 < /tmp/zz > /dev/null

11.27user 0.07system 0:11.34elapsed 99%CPU (0avgtext+0avgdata 2944maxresident)k

0inputs+0outputs (0major+229minor)pagefaults 0swaps

bash$ taskset -c 5 time gzip -9 < /tmp/zz > /dev/null

11.18user 0.01system 0:11.19elapsed 99%CPU (0avgtext+0avgdata 2944maxresident)k

0inputs+0outputs (0major+230minor)pagefaults 0swaps

bash$ taskset -c 5 time gzip -9 < /tmp/zz > /dev/null

11.21user 0.05system 0:11.26elapsed 99%CPU (0avgtext+0avgdata 2928maxresident)k

0inputs+0outputs (0major+228minor)pagefaults 0swaps

Start a CPU-burning process on the second hyper-thread of that core, and retest:

bash$ taskset -c 11 perl -e 'while(1){}' &

[1] 4391

bash$ taskset -c 5 time gzip -9 < /tmp/zz > /dev/null

16.90user 0.09system 0:17.00elapsed 99%CPU (0avgtext+0avgdata 2944maxresident)k

0inputs+0outputs (0major+229minor)pagefaults 0swaps

bash$ taskset -c 5 time gzip -9 < /tmp/zz > /dev/null

16.80user 0.03system 0:16.84elapsed 99%CPU (0avgtext+0avgdata 2944maxresident)k

0inputs+0outputs (0major+230minor)pagefaults 0swaps

bash$ taskset -c 5 time gzip -9 < /tmp/zz > /dev/null

16.71user 0.07system 0:16.79elapsed 99%CPU (0avgtext+0avgdata 2928maxresident)k

0inputs+0outputs (0major+229minor)pagefaults 0swaps
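The retest above pins gzip to logical CPU 5 and the perl loop to logical CPU 11, on the basis that these two are hyper-threads of the same physical core. On Linux, the kernel exposes this pairing through sysfs. A minimal sketch follows; the function name `thread_siblings` and its optional second argument are hypothetical conveniences, while the sysfs path itself is the standard Linux CPU topology interface.

```sh
# thread_siblings CPU [SYSFS_ROOT]: print the logical CPUs that share a
# physical core with CPU, as reported by the Linux kernel (e.g. "5,11").
# SYSFS_ROOT is a hypothetical override, mainly useful for testing.
thread_siblings() {
    cat "${2:-/sys/devices/system/cpu}/cpu$1/topology/thread_siblings_list"
}

# Usage on a Linux host:
#   thread_siblings 5
```

The numbering of sibling threads varies between machines, so it is worth checking before choosing the arguments to `taskset -c`.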

And just to complete our set of tests:

bash$ taskset -c 11 perl -e 'while(1){}' &

[2] 4730

bash$ taskset -c 11 perl -e 'while(1){}' &

[3] 4731

bash$ taskset -c 11 perl -e 'while(1){}' &

[4] 4734

bash$ taskset -c 5 time gzip -9 < /tmp/zz > /dev/null

16.66user 0.06system 0:16.73elapsed 99%CPU (0avgtext+0avgdata 2928maxresident)k

0inputs+0outputs (0major+228minor)pagefaults 0swaps

bash$ taskset -c 5 time gzip -9 < /tmp/zz > /dev/null

16.60user 0.07system 0:16.68elapsed 99%CPU (0avgtext+0avgdata 2944maxresident)k

0inputs+0outputs (0major+229minor)pagefaults 0swaps

bash$ taskset -c 5 time gzip -9 < /tmp/zz > /dev/null

16.71user 0.08system 0:16.80elapsed 99%CPU (0avgtext+0avgdata 2944maxresident)k

0inputs+0outputs (0major+229minor)pagefaults 0swaps

Whoa, what happened here? Since we're explicitly selecting which logical CPU each process runs on, the second logical CPU now has 4 processes (perl) running on it, while the first logical CPU only runs gzip, so the gzip times are unchanged. For a matching test to the NetBSD case, we could do:

bash$ taskset -c 5,11 perl -e 'while(1){}' &

[1] 4966

bash$ taskset -c 5,11 perl -e 'while(1){}' &

[2] 4969

bash$ taskset -c 5,11 perl -e 'while(1){}' &

[3] 4970

bash$ taskset -c 5,11 perl -e 'while(1){}' &

[4] 4972

bash$ taskset -c 5,11 time gzip -9 < /tmp/zz > /dev/null

16.63user 0.04system 0:42.45elapsed 39%CPU (0avgtext+0avgdata 2944maxresident)k

0inputs+0outputs (0major+229minor)pagefaults 0swaps

bash$ taskset -c 5,11 time gzip -9 < /tmp/zz > /dev/null

16.72user 0.11system 0:42.89elapsed 39%CPU (0avgtext+0avgdata 2944maxresident)k

0inputs+0outputs (0major+229minor)pagefaults 0swaps

bash$ taskset -c 5,11 time gzip -9 < /tmp/zz > /dev/null

16.83user 0.08system 0:43.64elapsed 38%CPU (0avgtext+0avgdata 2928maxresident)k

0inputs+0outputs (0major+228minor)pagefaults 0swaps
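The elapsed times of roughly 42 to 44 seconds and the 38-39% CPU figures are consistent with five always-runnable processes (four perl loops plus gzip) sharing two logical CPUs. A rough sketch, assuming the scheduler divides the two logical CPUs evenly among the five processes (the function name `expected_elapsed` is hypothetical):

```sh
# expected_elapsed CPU_SECONDS NPROCS NCPUS: estimated wall time for one of
# NPROCS always-runnable processes fairly sharing NCPUS logical CPUs.
expected_elapsed() {
    awk -v cpu="$1" -v n="$2" -v c="$3" 'BEGIN {
        share = (n > c) ? c / n : 1    # fraction of one logical CPU per process
        printf "%.1f\n", cpu / share
    }'
}

# First run above: 16.63s user + 0.04s system, 5 processes, 2 logical CPUs.
expected_elapsed 16.67 5 2   # prints 41.7
```

A 2/5 share is 40% of one logical CPU, close to the 38-39% that `time` reports, and the estimate of 41.7 seconds is close to the measured 42.45 to 43.64 seconds.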

NetBSD 7.0 on Intel Core i7 (Sandy Bridge)

And a more modern example on NetBSD, on an Intel(R) Core(TM) i7-2600 CPU @ 3.40GHz. First, a baseline:

ksh$ sudo schedctl -A 3,7 time gzip -9 < /tmp/zz > /dev/null

10.37 real 10.06 user 0.30 sys

ksh$ sudo schedctl -A 3,7 time gzip -9 < /tmp/zz > /dev/null

10.37 real 10.17 user 0.18 sys

ksh$ sudo schedctl -A 3,7 time gzip -9 < /tmp/zz > /dev/null

10.40 real 10.08 user 0.28 sys

With a single spinning process:

ksh$ sudo schedctl -A 3,7 perl -e 'while(1){}' &

[1] 20565

ksh$ sudo schedctl -A 3,7 time gzip -9 < /tmp/zz > /dev/null

14.63 real 13.69 user 0.21 sys

ksh$ sudo schedctl -A 3,7 time gzip -9 < /tmp/zz > /dev/null

14.46 real 14.24 user 0.22 sys

ksh$ sudo schedctl -A 3,7 time gzip -9 < /tmp/zz > /dev/null

14.46 real 14.26 user 0.20 sys

And now with 3 more spinning processes:

ksh$ sudo schedctl -A 3,7 perl -e 'while(1){}' &

[2] 19974

ksh$ sudo schedctl -A 3,7 perl -e 'while(1){}' &

[3] 25182

ksh$ sudo schedctl -A 3,7 perl -e 'while(1){}' &

[4] 27197

ksh$ sudo schedctl -A 3,7 time gzip -9 < /tmp/zz > /dev/null

32.05 real 14.22 user 0.29 sys

ksh$ sudo schedctl -A 3,7 time gzip -9 < /tmp/zz > /dev/null

28.45 real 14.22 user 0.27 sys

ksh$ sudo schedctl -A 3,7 time gzip -9 < /tmp/zz > /dev/null

38.47 real 14.28 user 0.21 sys

All pretty much as expected. Single-thread latency increases by about 36% to 41% depending on the run, for a multi-threaded instruction throughput increase of roughly 42% to 47%.

For another test, we'll compute the SHA1 of a 4GiB file cached in RAM, run the same command in a loop as the load keeping the other hyper-thread busy, and bind each of the two to a single logical CPU:

ksh$ sudo schedctl -A 3 time openssl sha1 < /tmp/zz > /dev/null

10.52 real 6.58 user 3.90 sys

ksh$ sudo schedctl -A 3 time openssl sha1 < /tmp/zz > /dev/null

10.39 real 6.56 user 3.81 sys

ksh$ sudo schedctl -A 3 time openssl sha1 < /tmp/zz > /dev/null

10.35 real 6.41 user 3.90 sys

ksh$ sudo schedctl -A 7 sh -c 'while :; do openssl sha1 < zz > /dev/null; done' &

[1] 2406

ksh$ sudo schedctl -A 3 time openssl sha1 < /tmp/zz > /dev/null

16.40 real 12.56 user 3.82 sys

ksh$ sudo schedctl -A 3 time openssl sha1 < /tmp/zz > /dev/null

16.33 real 12.50 user 3.82 sys

ksh$ sudo schedctl -A 3 time openssl sha1 < /tmp/zz > /dev/null

16.44 real 12.44 user 3.98 sys

For reference, the CPU is:

ksh$ sudo cpuctl identify 3

cpu3: highest basic info 0000000d

cpu3: highest extended info 80000008

cpu3: "Intel(R) Core(TM) i7-2600 CPU @ 3.40GHz"

cpu3: Intel Xeon E3-12xx, 2nd gen i7, i5, i3 2xxx (686-class), 3392.45 MHz

cpu3: family 0x6 model 0x2a stepping 0x7 (id 0x206a7)

cpu3: features 0xbfebfbff<FPU,VME,DE,PSE,TSC,MSR,PAE,MCE,CX8,APIC,SEP,MTRR,PGE>

cpu3: features 0xbfebfbff<MCA,CMOV,PAT,PSE36,CFLUSH,DS,ACPI,MMX,FXSR,SSE,SSE2>

cpu3: features 0xbfebfbff<SS,HTT,TM,SBF>

cpu3: features1 0x1fbae3ff<SSE3,PCLMULQDQ,DTES64,MONITOR,DS-CPL,VMX,SMX,EST>

cpu3: features1 0x1fbae3ff<TM2,SSSE3,CX16,xTPR,PDCM,PCID,SSE41,SSE42,X2APIC>

cpu3: features1 0x1fbae3ff<POPCNT,DEADLINE,AES,XSAVE,OSXSAVE,AVX>

cpu3: features2 0x28100800<SYSCALL/SYSRET,XD,RDTSCP,EM64T>

cpu3: features3 0x1<LAHF>

cpu3: xsave features 0x7<x87,SSE,AVX>

cpu3: xsave instructions 0x1<XSAVEOPT>

cpu3: xsave area size: current 832, maximum 832, xgetbv enabled

cpu3: enabled xsave 0x7<x87,SSE,AVX>

cpu3: I-cache 32KB 64B/line 8-way, D-cache 32KB 64B/line 8-way

cpu3: L2 cache 256KB 64B/line 8-way

cpu3: L3 cache 8MB 64B/line 16-way

cpu3: 64B prefetching

cpu3: ITLB 64 4KB entries 4-way, 2M/4M: 8 entries

cpu3: DTLB 64 4KB entries 4-way, 2M/4M: 32 entries (L0)

cpu3: L2 STLB 512 4KB entries 4-way

cpu3: Initial APIC ID 6

cpu3: Cluster/Package ID 0

cpu3: Core ID 3

cpu3: SMT ID 0

cpu3: DSPM-eax 0x77<DTS,IDA,ARAT,PLN,ECMD,PTM>

cpu3: DSPM-ecx 0x9<HWF,EPB>

cpu3: SEF highest subleaf 00000000

cpu3: microcode version 0x23, platform ID 1

Linux 3.13 on Xeon E5-1650

Slightly more modern CPU:

bash$ taskset -c 5 time gzip -9 < /tmp/zz > /dev/null

12.06user 0.08system 0:12.16elapsed 99%CPU (0avgtext+0avgdata 812maxresident)k

0inputs+0outputs (0major+253minor)pagefaults 0swaps

bash$ taskset -c 5 time gzip -9 < /tmp/zz > /dev/null

12.03user 0.06system 0:12.11elapsed 99%CPU (0avgtext+0avgdata 812maxresident)k

0inputs+0outputs (0major+253minor)pagefaults 0swaps

bash$ taskset -c 5 time gzip -9 < /tmp/zz > /dev/null

12.23user 0.06system 0:12.31elapsed 99%CPU (0avgtext+0avgdata 812maxresident)k

0inputs+0outputs (0major+253minor)pagefaults 0swaps

Busying the other hyper-thread of the same core:

bash$ taskset -c 11 perl -e 'while(1){}' &

[1] 15995

bash$ taskset -c 5 time gzip -9 < /tmp/zz > /dev/null

17.02user 0.07system 0:17.12elapsed 99%CPU (0avgtext+0avgdata 812maxresident)k

0inputs+0outputs (0major+253minor)pagefaults 0swaps

bash$ taskset -c 5 time gzip -9 < /tmp/zz > /dev/null

16.92user 0.09system 0:17.04elapsed 99%CPU (0avgtext+0avgdata 808maxresident)k

0inputs+0outputs (0major+253minor)pagefaults 0swaps

bash$ taskset -c 5 time gzip -9 < /tmp/zz > /dev/null

16.82user 0.09system 0:16.94elapsed 99%CPU (0avgtext+0avgdata 808maxresident)k

0inputs+0outputs (0major+253minor)pagefaults 0swaps

So, in this very primitive test, we see about a 40% increase in CPU time (equating to single-thread latency), which also means an approximately 43% increase in overall throughput ($2 / 1.4 \approx 1.43$) by enabling hyper-threading (overall instruction throughput across multiple threads).
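The $2/1.4$ figure can be derived as follows. This is a sketch that assumes both hyper-threads run identical work and are slowed by the same fraction $s$; in the test above the second thread is a perl busy loop rather than a second gzip, so it is only an approximation. The symbols $T$ (time for one unit of work on an otherwise idle core) and $s$ are introduced here for illustration.

```latex
\text{throughput}_{\text{one thread}} = \frac{1}{T},
\qquad
\text{throughput}_{\text{two threads}} = \frac{2}{(1+s)\,T},
\qquad
\frac{\text{throughput}_{\text{two threads}}}{\text{throughput}_{\text{one thread}}}
  = \frac{2}{1+s}
```

With $s = 0.4$ this gives $2/1.4 \approx 1.43$, that is, about 43% more work completed per unit of wall time.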

CPU for this test was:

Intel(R) Xeon(R) CPU E5-1650 v2 @ 3.50GHz.

Linux 6.5.13 on AMD Ryzen Threadripper PRO 3995WX

bash$ taskset -c 5 time gzip -9 < /tmp/zz > /dev/null

10.61user 0.06system 0:10.67elapsed 99%CPU (0avgtext+0avgdata 1024maxresident)k

0inputs+0outputs (0major+210minor)pagefaults 0swaps

bash$ taskset -c 5 time gzip -9 < /tmp/zz > /dev/null

10.56user 0.05system 0:10.62elapsed 99%CPU (0avgtext+0avgdata 1024maxresident)k

0inputs+0outputs (0major+211minor)pagefaults 0swaps

bash$ taskset -c 5 time gzip -9 < /tmp/zz > /dev/null

10.51user 0.07system 0:10.59elapsed 99%CPU (0avgtext+0avgdata 1024maxresident)k

0inputs+0outputs (0major+210minor)pagefaults 0swaps

vs

bash$ taskset -c 69 perl -e 'while(1){}' &

[1] 2971374

bash$ taskset -c 5 time gzip -9 < /tmp/zz > /dev/null

13.26user 0.04system 0:13.31elapsed 99%CPU (0avgtext+0avgdata 1024maxresident)k

0inputs+0outputs (0major+207minor)pagefaults 0swaps

bash$ taskset -c 5 time gzip -9 < /tmp/zz > /dev/null

13.23user 0.06system 0:13.30elapsed 99%CPU (0avgtext+0avgdata 1024maxresident)k

0inputs+0outputs (0major+211minor)pagefaults 0swaps

bash$ taskset -c 5 time gzip -9 < /tmp/zz > /dev/null

13.20user 0.05system 0:13.26elapsed 99%CPU (0avgtext+0avgdata 1024maxresident)k

0inputs+0outputs (0major+209minor)pagefaults 0swaps

On this CPU, we see roughly a 25% increase in CPU time, equating to about a 60% throughput increase; a great improvement over older CPUs, at this very specific workload.

NetBSD 10.1 on AMD Ryzen 9 7900 12-Core Processor

New machine, new numbers:

ksh$ schedctl -A 11,23 time gzip -9 < /tmp/zz > /dev/null

10.18 real 10.11 user 0.07 sys

ksh$ schedctl -A 11,23 time gzip -9 < /tmp/zz > /dev/null

10.18 real 10.09 user 0.08 sys

ksh$ schedctl -A 11,23 time gzip -9 < /tmp/zz > /dev/null

10.17 real 10.11 user 0.06 sys

ksh$ schedctl -A 11,23 perl -e 'while(1){}' &

[1] 23566

ksh$ schedctl -A 11,23 time gzip -9 < /tmp/zz > /dev/null

13.17 real 13.08 user 0.09 sys

ksh$ schedctl -A 11,23 time gzip -9 < /tmp/zz > /dev/null

13.36 real 13.28 user 0.08 sys

ksh$ schedctl -A 11,23 time gzip -9 < /tmp/zz > /dev/null

13.36 real 13.23 user 0.12 sys

Around a 30% increase in CPU time, for a ~54% throughput increase.

ksh$ schedctl -A 11,23 perl -e 'while(1){}' &

[2] 22188

ksh$ schedctl -A 11,23 perl -e 'while(1){}' &

[3] 12941

ksh$ schedctl -A 11,23 perl -e 'while(1){}' &

[4] 6156

ksh$ schedctl -A 11,23 time gzip -9 < /tmp/zz > /dev/null

25.36 real 13.22 user 0.10 sys

ksh$ schedctl -A 11,23 time gzip -9 < /tmp/zz > /dev/null

25.35 real 13.22 user 0.09 sys

ksh$ schedctl -A 11,23 time gzip -9 < /tmp/zz > /dev/null

25.23 real 13.24 user 0.10 sys

The user CPU time remains consistent under more load; only the real time increases.

CPU is:

cpu0: highest basic info 00000010

cpu0: highest extended info 80000028

cpu0: "AMD Ryzen 9 7900 12-Core Processor "

cpu0: AMD Family 19h (686-class), 3693.07 MHz

cpu0: family 0x19 model 0x61 stepping 0x2 (id 0xa60f12)

cpu0: features 0x178bfbff<FPU,VME,DE,PSE,TSC,MSR,PAE,MCE,CX8,APIC,SEP,MTRR,PGE>

cpu0: features 0x178bfbff<MCA,CMOV,PAT,PSE36,CLFSH,MMX,FXSR,SSE,SSE2,HTT>

cpu0: features1 0x7ed8320b<SSE3,PCLMULQDQ,MONITOR,SSSE3,FMA,CX16,SSE41,SSE42>

cpu0: features1 0x7ed8320b<MOVBE,POPCNT,AES,XSAVE,OSXSAVE,AVX,F16C,RDRAND>

cpu0: features2 0x2fd3fbff<SYSCALL/SYSRET,NOX,MMXX,MMX,FXSR,FFXSR,P1GB,RDTSCP>

cpu0: features2 0x2fd3fbff<LONG>

cpu0: features3 0x75c237ff<LAHF,CMPLEGACY,SVM,EAPIC,ALTMOVCR0,LZCNT,SSE4A>

cpu0: features3 0x75c237ff<MISALIGNSSE,3DNOWPREFETCH,OSVW,IBS,SKINIT,WDT,TCE>

cpu0: features3 0x75c237ff<TopoExt,PCExtC,PCExtNB,DBExt,L2IPERFC,MWAITX>

cpu0: features3 0x75c237ff<AddrMaskExt>

cpu0: features5 0xf1bf97a9<FSGSBASE,BMI1,AVX2,SMEP,BMI2,ERMS,INVPCID,QM,PQE>

cpu0: features5 0xf1bf97a9<AVX512F,AVX512DQ,RDSEED,ADX,SMAP,AVX512_IFMA>

cpu0: features5 0xf1bf97a9<CLFLUSHOPT,CLWB,AVX512CD,SHA,AVX512BW,AVX512VL>

cpu0: features6 0x405fce<AVX512_VBMI,UMIP,PKU,AVX512_VBMI2,CET_SS,GFNI,VAES>

cpu0: features6 0x405fce<VPCLMULQDQ,AVX512_VNNI,AVX512_BITALG,AVX512_VPOPCNTDQ>

cpu0: features6 0x405fce<MAWAU=0,RDPID>

cpu0: xsave features 0x2e7<x87,SSE,AVX,Opmask,ZMM_Hi256,Hi16_ZMM,PKRU>

cpu0: xsave instructions 0xf<XSAVEOPT,XSAVEC,XGETBV,XSAVES>

cpu0: xsave area size: current 2432, maximum 2440, xgetbv enabled

cpu0: enabled xsave 0xe7<x87,SSE,AVX,Opmask,ZMM_Hi256,Hi16_ZMM>

cpu0: I-cache: 32KB 64B/line 8-way, D-cache: 32KB 64B/line 8-way

cpu0: L2 cache: 1MB 64B/line 8-way

cpu0: L3 cache: 32MB 64B/line 16-way

cpu0: ITLB: 64 4KB entries fully associative, 64 2MB entries fully associative, 64 1GB entries fully associative

cpu0: DTLB: 72 4KB entries fully associative, 72 2MB entries fully associative, 72 1GB entries fully associative

cpu0: L2 ITLB: 512 4KB entries 4-way, 512 2MB entries 2-way

cpu0: L2 DTLB: 3072 4KB entries 8-way, 3072 2MB entries 6-way, 64 1GB entries fully associative

cpu0: Initial APIC ID 0

cpu0: Cluster/Package ID 0

cpu0: Core ID 0

cpu0: SMT ID 0

cpu0: MONITOR/MWAIT extensions 0x3<EMX,IBE>

cpu0: monitor-line size 64

cpu0: C0 substates 1

cpu0: C1 substates 1

cpu0: DSPM-eax 0x4<ARAT>

cpu0: DSPM-ecx 0x1<HWF,NTDC=0>

cpu0: SEF highest subleaf 00000001

cpu0: SEF-subleaf1-eax 0x20<AVX512_BF16>

cpu0: Power Management features: 0x6799<TS,TTP,HTC,HWP,ITSC,CPB,EffFreq,CONNSTBY,RAPL>

cpu0: AMD Extended features 0x791ef257<CLZERO,IRPERF,XSAVEERPTR,RDPRU,MBE>

cpu0: AMD Extended features 0x791ef257<WBNOINVD,IBPB,INT_WBINVD,IBRS,STIBP>

cpu0: AMD Extended features 0x791ef257<STIBP_ALWAYSON,PREFER_IBRS>

cpu0: AMD Extended features 0x791ef257<IBRS_SAMEMODE,EFER_LSMSLE_UN,SSBD,CPPC>

cpu0: AMD Extended features 0x791ef257<PSFD,BTC_NO,IBPB_RET>

cpu0: AMD Extended features2 0x62fcf<NoNestedDataBp>

cpu0: AMD Extended features2 0x62fcf<FsGsKernelGsBaseNonSerializing>

cpu0: AMD Extended features2 0x62fcf<LfenceAlwaysSerialize,SmmPgCfgLock>

cpu0: AMD Extended features2 0x62fcf<NullSelectClearsBase,UpperAddressIgnore>

cpu0: AMD Extended features2 0x62fcf<AutomaticIBRS,NoSmmCtlMSR,FSRS,FSRC>

cpu0: AMD Extended features2 0x62fcf<PrefetchCtlMSR,CpuidUserDis,EPSF>

cpu0: RAS features 0x3b<OVFL_RECOV,SUCCOR,MCAX>

cpu0: SVM Rev. 1

cpu0: SVM NASID 32768

cpu0: SVM features 0x1ebfbcff<NP,LbrVirt,SVML,NRIPS,TSCRate,VMCBCleanBits>

cpu0: SVM features 0x1ebfbcff<FlushByASID,DecodeAssist,PauseFilter,B11>

cpu0: SVM features 0x1ebfbcff<PFThreshold,AVIC,V_VMSAVE_VMLOAD,VGIF,GMET>

cpu0: SVM features 0x1ebfbcff<x2AVIC,SSSCHECK,SPEC_CTRL,ROGPT>

cpu0: SVM features 0x1ebfbcff<HOST_MCE_OVERRIDE,VNMI,IBSVIRT>

cpu0: SVM features 0x1ebfbcff<ExtLvtOffsetFaultChg,VmcbAddrChkChg>

cpu0: IBS features 0xbff<IBSFFV,FetchSam,OpSam,RdWrOpCnt,OpCnt,BrnTrgt>

cpu0: IBS features 0xbff<OpCntExt,RipInvalidChk,OpBrnFuse,IbsFetchCtlExtd>

cpu0: IBS features 0xbff<IbsL3MissFiltering>

cpu0: Encrypted Memory features 0x1<SME>

cpu0: Perfmon: 0x7<PerfMonV2,LbrStack,LbrAndPmcFreeze>

cpu0: Perfmon: counters: Core 6, Northbridge 16, UMC 8

cpu0: Perfmon: LBR Stack 16 entries

cpu0: UCode version: 0xa60120c

Additional

In truth, similar effects can be seen with other shared resources, just not as easily. Some examples include shared L2/L3 caches, and memory bandwidth. Both may increase the CPU time required for a given unit of work.